Abstract

This paper aims to estimate the six-degree-of-freedom (6DOF) poses of objects from a single RGB image in which the target objects are partially occluded. Most recent methods use a deep neural network to predict the projected two-dimensional (2D) locations of three-dimensional (3D) keypoints and then apply a Perspective-n-Point (PnP) algorithm to compute the 6DOF poses.

Several researchers have pointed out the uncertainty of the predicted locations and modelled it according to predefined rules or functions, but the performance of such approaches may still be degraded if occlusion is present.

To address this problem, we formulated 2D keypoint locations as probabilistic distributions in our novel loss function and developed a confidence-based pose estimation network. This network not only predicts the 2D keypoint locations from each visible patch of a target object but also provides the corresponding confidence values in an unsupervised fashion.
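As a rough illustration of what treating a keypoint location as a probabilistic distribution can look like, the sketch below uses the negative log-likelihood of an isotropic 2D Gaussian. This particular form, the function name `gaussian_nll_2d`, and the parameter `sigma` are assumptions made for illustration only; the loss actually used in the paper is defined in the manuscript and may differ.

```python
import math


def gaussian_nll_2d(pred_xy, sigma, target_xy):
    """Negative log-likelihood of target_xy under an isotropic 2D Gaussian.

    pred_xy   -- predicted keypoint location (x, y), used as the mean
    sigma     -- predicted standard deviation in pixels (hypothetical output)
    target_xy -- ground-truth keypoint location (x, y)
    """
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    dx = pred_xy[0] - target_xy[0]
    dy = pred_xy[1] - target_xy[1]
    sq_err = dx * dx + dy * dy
    # -log p(x) = ||x - mu||^2 / (2 sigma^2) + 2 log(sigma) + log(2 pi)
    return sq_err / (2.0 * sigma ** 2) + 2.0 * math.log(sigma) + math.log(2.0 * math.pi)


# A patch whose prediction is 5 pixels off (for example, an occluded one)
# is penalised less when it also reports a larger sigma.
overconfident = gaussian_nll_2d((3.0, 4.0), 1.0, (0.0, 0.0))
calibrated = gaussian_nll_2d((3.0, 4.0), math.sqrt(12.5), (0.0, 0.0))
```

Under this assumed loss, no confidence labels are needed: the squared-error term pushes `sigma` up on unreliable patches, while the `log(sigma)` term keeps it from growing without bound, so a value such as `1 / sigma` can serve as a learned confidence.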

Through the proper fusion of the most reliable local predictions, the proposed method can improve the accuracy of pose estimation when target objects are partially occluded.
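The fusion step can be pictured with a minimal sketch such as the one below, which keeps the `top_k` most confident patch predictions for one keypoint and averages them with confidence weights. The function name `fuse_keypoint`, the `top_k` parameter, and the weighted-average rule are hypothetical choices for illustration; the fusion scheme used in the paper is described in the manuscript.

```python
def fuse_keypoint(predictions, top_k=3):
    """Fuse per-patch predictions of a single 2D keypoint.

    predictions -- list of (x, y, confidence) tuples, one per visible patch
    top_k       -- number of most confident predictions to keep (assumed)
    Returns the confidence-weighted mean (x, y) of the kept predictions.
    """
    if not predictions:
        raise ValueError("at least one prediction is required")
    # Keep only the most reliable local predictions.
    best = sorted(predictions, key=lambda p: p[2], reverse=True)[:top_k]
    total = sum(conf for _, _, conf in best)
    if total <= 0:
        raise ValueError("confidences must sum to a positive value")
    x = sum(px * conf for px, _, conf in best) / total
    y = sum(py * conf for _, py, conf in best) / total
    return x, y


# Two patches agree with high confidence; a third, low-confidence patch
# (for instance one near an occluder) is far off and is discarded.
patch_predictions = [(10.0, 10.0, 0.9), (12.0, 10.0, 0.9), (50.0, 80.0, 0.05)]
fused = fuse_keypoint(patch_predictions, top_k=2)
```

The fused 2D locations of all keypoints, together with their known 3D model coordinates, would then be passed to a PnP solver to recover the 6DOF pose.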

Experiments demonstrated that our method outperforms state-of-the-art methods on a major occlusion dataset used for 6D object pose estimation. Moreover, the framework is efficient and feasible for real-time multimedia applications.

Experiments

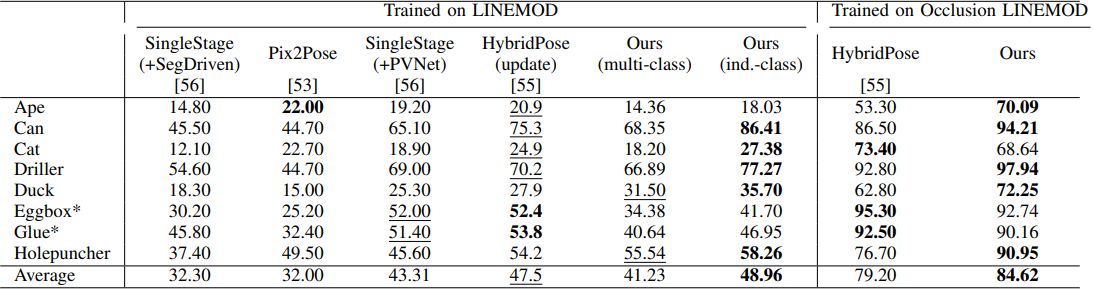

We compared our approach with state-of-the-art methods in which a single RGB image is used as the input, including SingleStage(+SegDriven) [12], [56], Pix2Pose [53], SingleStage(+PVNet) [13], [56], and HybridPose [55]. The proposed method can estimate object poses for multiple classes simultaneously while retaining reasonable accuracy.

Results of the proposed and comparative methods are shown below. Please refer to the manuscript and supplementary files for more results and comparisons.

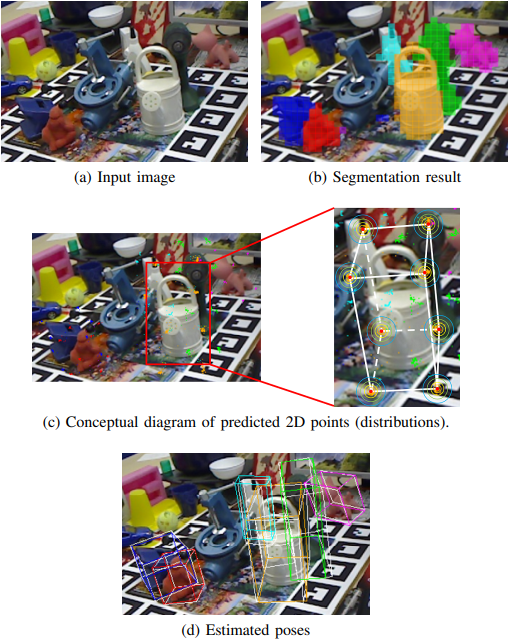

Figure. Qualitative results of our method on the Occlusion LINEMOD dataset.

White bounding boxes depict ground-truth poses and the bounding boxes in other colors depict our predicted poses of different classes.

Table. Comparison of the proposed method with state-of-the-art methods in terms of ADD(-S)-0.1d, tested on the Occlusion LINEMOD dataset.
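For readers unfamiliar with the metric, ADD(-S)-0.1d is commonly computed as follows: the model points are transformed by the ground-truth pose and by the predicted pose, the average point distance is measured (ADD), and the pose counts as correct if that average is below 10% of the object diameter. For symmetric objects, ADD-S replaces the one-to-one distance with the distance to the closest predicted point. The sketch below follows this common definition; the function names and the toy data are illustrative assumptions, not the evaluation code used for the table.

```python
import math


def transform(points, rotation, translation):
    """Apply a rigid transform R * p + t to a list of 3D points."""
    return [
        tuple(
            sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
            for i in range(3)
        )
        for p in points
    ]


def add_distance(points, r_gt, t_gt, r_pred, t_pred, symmetric=False):
    """Average distance between model points under the two poses.

    symmetric=False gives ADD (same-index point pairs);
    symmetric=True gives ADD-S (closest predicted point for each GT point).
    """
    gt = transform(points, r_gt, t_gt)
    pred = transform(points, r_pred, t_pred)
    if symmetric:
        dists = [min(math.dist(g, p) for p in pred) for g in gt]
    else:
        dists = [math.dist(g, p) for g, p in zip(gt, pred)]
    return sum(dists) / len(dists)


def pose_is_correct(distance, diameter, threshold=0.1):
    """A pose is accepted if the distance is below threshold * diameter."""
    return distance < threshold * diameter


# Toy example: a two-point model that is symmetric under a 180-degree
# rotation about the z axis.
identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
flip_z = [[-1, 0, 0], [0, -1, 0], [0, 0, 1]]
model = [(1.0, 0.0, 0.0), (-1.0, 0.0, 0.0)]
zero = (0.0, 0.0, 0.0)

add = add_distance(model, identity, zero, flip_z, zero)
add_s = add_distance(model, identity, zero, flip_z, zero, symmetric=True)
```

In the toy example the flipped pose is heavily penalised by ADD but not by ADD-S, which is why the symmetric variant is used for symmetric objects.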

Publication

Wei-Lun Huang‡, Chun-Yi Hung‡, I-Chen Lin*, "Confidence-based 6D Object Pose Estimation," IEEE Trans. Multimedia, 24: 3025-3035, June, 2022. (SCI, EI)

Paper:

preprint_version (about 12MB), published version (link to the IEEE digital library)

Supplementary file:

TMM21_supplement (pdf, about 16MB)

BibTeX

@ARTICLE{HuangTMM22,
  author={Huang, Wei-Lun and Hung, Chun-Yi and Lin, I-Chen},
  journal={IEEE Transactions on Multimedia},
  title={Confidence-based 6D Object Pose Estimation},
  year={2022},
  volume={24},
  number={},
  pages={3025-3035},
  doi={10.1109/TMM.2021.3092149}
}

Acknowledgements

The authors would like to thank Yu-Lun Wang and other CAIG lab members for their assistance in experiments. This work was partially supported by the Ministry of Science and Technology, Taiwan under grant no. MOST 109-2221-E-009-122-MY3.